Ma (間): What Japanese Philosophy Taught Me About Cybersecurity

The most devastating cyberattacks flow through spaces we never knew existed, bypassing our most carefully constructed defenses entirely. Now, bear with me here. After over 25 years in technology, I’ve started seeing our security blind spots differently. This shift in thinking came from an unexpected place: years of struggling with Japanese and diving into Eastern philosophy.

Ten years of study, countless hours with textbooks and private tutors, and yet fluency has always eluded me. The Japanese language defeated me in the end: I know many words, and I can understand basic sentences, but the deeper structures remain out of my reach. Yet this struggle, combined with my fascination for Eastern philosophy, has given me an appreciation for concepts that have no English equivalent, ways of thinking that our language doesn’t quite capture. Among them, ma (間) stands out as particularly relevant to my work in cybersecurity.

In Japanese, ma represents the purposeful space between objects, the pause between notes, or the silence that gives meaning to sound. Western thought typically sees empty space as absence or nothingness, but ma recognizes it as presence of a different kind. This distinction could transform how we approach cybersecurity, particularly in defending against social engineering and understanding the full chain of compromise.

Our current security models excel at fortifying each discrete stage. We monitor for reconnaissance, block malware delivery, prevent exploitation, yet attackers consistently thwart our defenses by operating in the spaces between these stages, exploiting the transitions between our controls (the relationship space). Understanding ma offers a way to make these seemingly invisible spaces visible, and to architect security for the intervals where our traditional defenses don’t typically reside.

A targeted phishing campaign begins with LinkedIn research (reconnaissance), but the email arrives on Friday afternoon when employees are mentally transitioning to weekend mode, or on a Monday morning when inbox overwhelm creates cognitive overload. This temporal ma (the intentional use of time-based spaces and transitions) gives attackers an advantage. The message references a real project the target is working on (intel from reconnaissance) but asks for feedback on a document, shifting from the known to the unknown. The attacker has moved through multiple stages simultaneously, existing in the spaces between them rather than progressing through them one by one, as the cyber kill chain assumes.

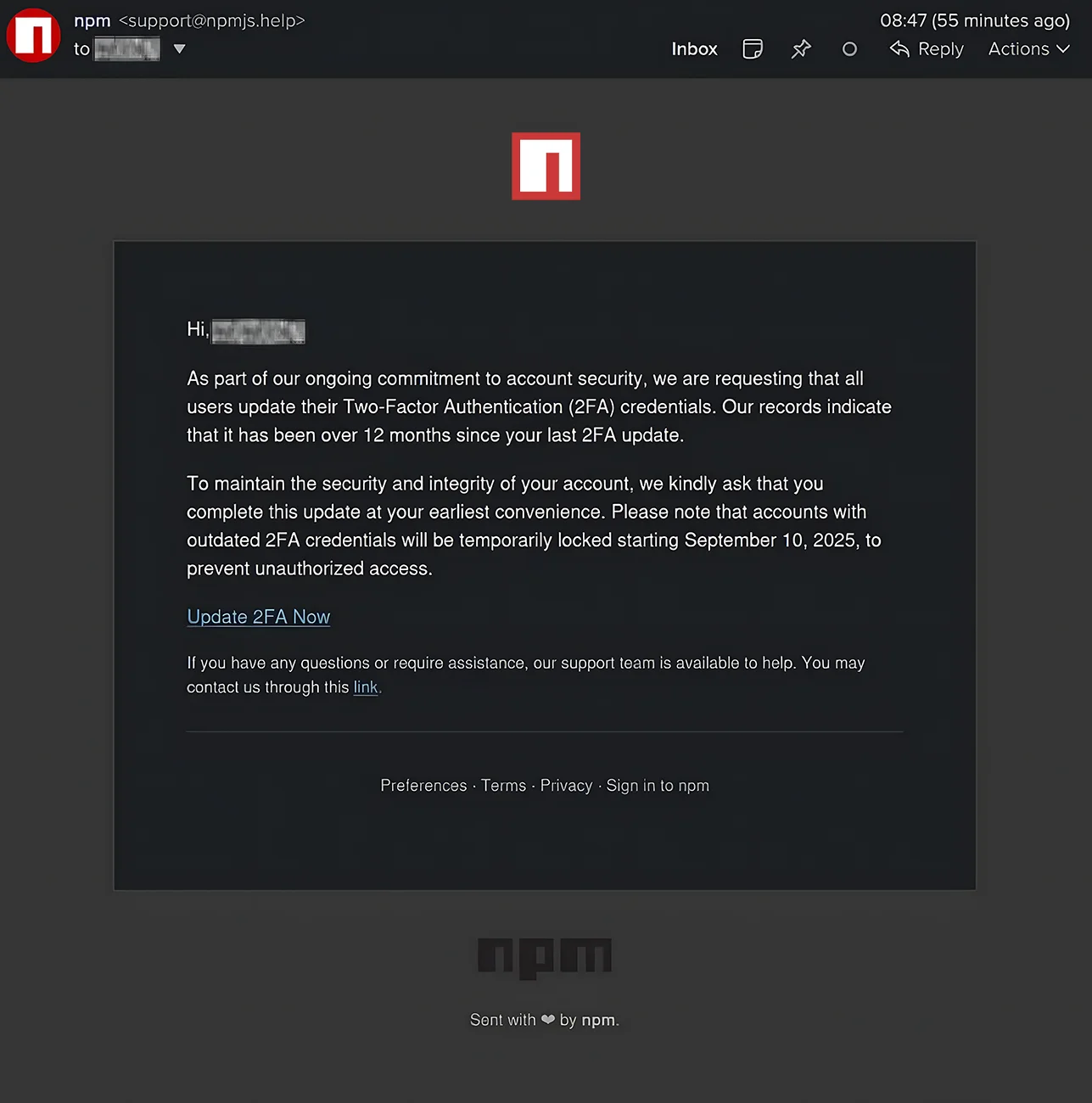

Another example emerges from the recent NPM supply chain attack that compromised package maintainer Josh Junon’s account. The phishing email came from an address on a lookalike domain, very similar to the legitimate npmjs.com. The entire attack hinged on that split-second transition where the brain sees “npmjs” and autocompletes trust before noticing the unusual TLD. Had this email arrived at an alias specifically created for NPM communications rather than a primary email address, the violation of expected ma might have been immediately apparent. I’ll talk more about this in a moment, but you’ll see that an email from NPM arriving at an address never associated with NPM breaks the expected relationship, making the attack more visible through violated boundaries rather than technical indicators alone.

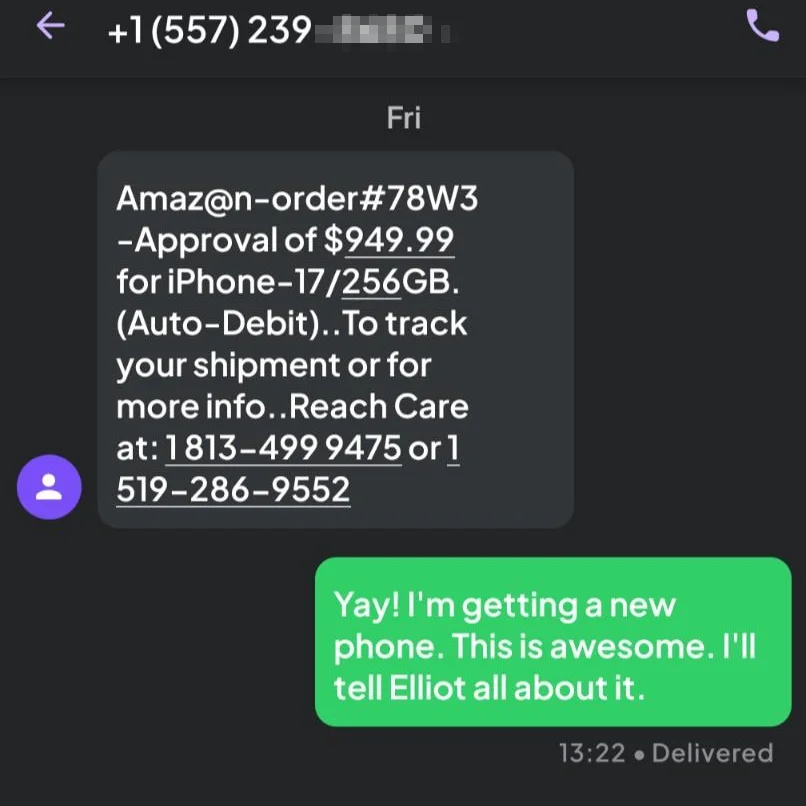
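The “npmjs plus unusual TLD” pattern described above can be checked mechanically. Below is a minimal sketch, assuming a hand-maintained allowlist; the names `EXPECTED_DOMAINS` and `is_lookalike` and the address `support@npmjs.example` are hypothetical placeholders for illustration, not details taken from the actual attack.

```python
# Hypothetical allowlist: brand label -> domains that brand legitimately sends from.
EXPECTED_DOMAINS = {
    "npmjs": {"npmjs.com"},
}


def sender_domain(address: str) -> str:
    """Return the lowercased domain part of an email address."""
    return address.rsplit("@", 1)[-1].strip().lower()


def is_lookalike(address: str) -> bool:
    """True when the domain carries a known brand label but is not an allowed domain."""
    domain = sender_domain(address)
    labels = domain.split(".")
    for brand, allowed in EXPECTED_DOMAINS.items():
        if brand not in labels:
            continue
        # Accept exact matches and subdomains of an allowed domain.
        trusted = any(domain == d or domain.endswith("." + d) for d in allowed)
        if not trusted:
            return True
    return False


if __name__ == "__main__":
    for addr in ("support@npmjs.com", "support@npmjs.example"):
        print(addr, "->", "lookalike" if is_lookalike(addr) else "ok")
```

A check like this only catches brand labels reused under a different domain. It would not catch misspellings or homoglyphs, so it complements rather than replaces the pause the brain needs.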

The power of this approach was demonstrated beautifully just a few days ago, when I received this SMS about an iPhone 17 order I never placed. The attackers exploited temporal ma brilliantly, sending these messages when many people are actively ordering new iPhones. I might add that they couldn’t have done any reconnaissance, because the message was sent to a virtual phone number that isn’t associated with my real name. Even so, the scam revealed itself instantly because that virtual number has no relationship with Apple. The message existed in the wrong identity space. Imagine your significant other sending you a message to say they’re out walking the dog, but you don’t have a dog! This boundary violation turns what could have been a moment of trust into immediate suspicion.

The Space Where Social Engineering Lives

Social engineering devastates organizations despite their mature security programs because it operates in spaces our controls rarely address. Research suggests that 68% of breaches involve the human element, yet our defenses focus almost exclusively on technical controls. When someone receives a phone call from “IT support,” several cognitive shifts occur. The recipient moves from their current work context to a help-seeking mode. They transition from stranger-caution to colleague-cooperation. Finally, they shift from data guardian to problem solver. Each of these transitions happens in quick succession, below conscious awareness.

Traditional security training tells people to “never give passwords over the phone,” or “never trust, always verify” in the case of Zero-Trust. These rules target stages while ignoring the transitions between positions where actual decisions occur. Ma changes the entire approach by making these transitions conscious and giving them presence of mind. We give them time and space to exist.

Pretexting

Consider the moment when an email shifts from providing information to making a request for action. Most phishing succeeds in that exact transition. The attacker establishes context, builds credibility, creates urgency, then slides the request through while the recipient is still in information-receiving mode. This is pretexting in action: the creation of a fabricated scenario that feels real enough to bypass our analytical thinking. By the time conscious evaluation kicks in, the click has already happened.

Just as a Japanese garden uses empty space to frame and define planted areas, security practices could create intentional pauses at these cognitive shift points, making the shift visible through mindful transitions.

When someone requests sensitive information, we need to establish space by pausing before responding. “Let me finish what I’m working on and call you back in five minutes.” This creates space where your cognitive state can properly adjust. The attacker relies on momentum, on keeping you in that liminal space where social reflexes override security awareness. The pause breaks their rhythm without breaking human connection. This reframes the entire defense model.

I personally believe that current security awareness training creates paranoid employees who either suspect everyone or, more commonly, abandon impossible vigilance and trust everyone.

Reconnaissance and the Architecture of Information Relationships

Organizations typically view reconnaissance as information gathering: attackers scanning networks, searching social media, mapping organizational structures. We defend by limiting information exposure, monitoring for scanning activity, and hiding technical details. However, this is only a partial view, and it leads to a misunderstanding of what reconnaissance actually accomplishes.

Reconnaissance transforms raw data into exploitable intelligence by creating relationships between isolated facts. For example, learning that Jennifer works in accounting is data, a single fact without context. Now, if Jennifer posts vacation photos on Instagram, and her name appears on next week’s conference speaker list, this is information, processed data with temporal and contextual meaning. When an attacker connects these pieces, they create a relationship: Jennifer will be unreachable during a specific window, making her the perfect person to impersonate. “Jennifer asked me to handle this while she’s at the conference” becomes believable precisely because both facts are true, even though the relationship between them is fabricated. This becomes the foundation of the attack.

Our current defensive approaches try to limit information availability, though most organizations fail to implement even basic protections. This is precisely what the OSINT Defense and Security Framework aims to address: the systematic protection of organizational information that is exposed as open-source intelligence (OSINT). Most organizations already have a massive exposure they don’t even know about (what I call the breach before the breach).

Organizations could deliberately craft their public information to create false relationships. Job postings that accurately describe roles but create misleading technical contexts. Social media presences that are authentic but create temporal gaps that confuse reconnaissance timelines. Conference attendance could be announced strategically, such as posting about attendance after returning rather than before, eliminating the window where attackers know exactly where someone is and can send malicious “thank you” packages laden with malware or tracking devices to hotels or send targeted phishing as “conference follow-ups.”

This approach recognizes that information always exists in relationship, and we can architect those relationships as deliberately as we architect our network perimeters. The space between facts becomes a security control.

The Other Side of Weaponization: Recovery

Most security frameworks end at “actions on objectives” as if cyberattacks conclude when data is stolen or systems are ransomed. There’s a critical space after weaponization that organizations rarely consider until forced to face it. The space where recovery lives, and this deserves as much attention as prevention.

When ransomware succeeds, as it too often does, organizations enter a space where they must tackle multiple realities simultaneously. The Schrödinger’s cat of ransomware perhaps!

Pre-attack infrastructure persists in backups and documentation, compromised systems exist in their encrypted or damaged form, and the recovery environment begins taking shape through restoration efforts.

- Trust relationships between systems require reconfiguration at the technical level.

- Customer relationships demand transparency about data exposure and service disruption.

- Internal team dynamics reorganize around incident response roles, with accountability and coordination replacing normal hierarchies.

Who makes decisions in this space? How do communication patterns change? What relationships need to exist between normally separated systems or teams?

Practical Implementation of 間

Implementing this new philosophical way of thinking in cybersecurity means adding a relational dimension to current frameworks. Where we currently see stages, we now need to see relationships.

The concept of ma can also reveal critical gaps in our identity and segmentation strategies. We meticulously segment networks, implement role-based access controls, and enforce password rotations, yet we undermine all of this by relying on single points of identity (typically a username or email address, and often a phone number). When a password reset email for a service that should have its own unique alias arrives at my primary email address instead, that should trigger immediate suspicion.

Consider how this changes our security posture. A SIM swap attack fails because the attacker won’t know which phone number handles recovery for each service; we’ve created space between different aspects of our digital identity. Even if they do know the phone number, a well-designed VoIP system leaves no cell phone carrier to socially engineer into carrying out the SIM swap. By creating separate communication channels for different services, we establish boundaries that make malicious attempts more obvious.
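The alias idea can be expressed as a simple lookup: each alias is reserved for one service, and anything else arriving there, or any service mail arriving anywhere else, is a boundary violation. This is a minimal sketch under assumed names; `SERVICE_ALIASES`, `in_expected_identity_space`, and all addresses are hypothetical.

```python
# Hypothetical registry: each per-service alias -> sender domains expected at that alias.
SERVICE_ALIASES = {
    "npm-7f3a@mail.example": {"npmjs.com"},
    "bank-c21d@mail.example": {"bank.example"},
}


def in_expected_identity_space(recipient: str, sender: str) -> bool:
    """Return True when a message arrived at the alias reserved for its sender's domain."""
    recipient = recipient.strip().lower()
    sender_domain = sender.rsplit("@", 1)[-1].strip().lower()
    expected = SERVICE_ALIASES.get(recipient)
    if expected is None:
        # Assumption: service mail should never reach an unregistered address
        # (such as the primary inbox), so this counts as a violation.
        return False
    return sender_domain in expected


if __name__ == "__main__":
    print(in_expected_identity_space("npm-7f3a@mail.example", "support@npmjs.com"))
    print(in_expected_identity_space("me@mail.example", "support@npmjs.com"))
```

The check says nothing about whether the content is malicious. It only surfaces the broken relationship between sender and recipient, which is the signal this section is about.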

For social engineering defense, implementation might include:

Training

Training focused on transition recognition that teaches employees to feel when they're shifting cognitive states. That moment when an email moves from informational to transactional. When a conversation shifts from general to specific. When someone invokes authority or urgency. These moments deserve attention because they're transitions, and transitions determine outcomes.

Communication

Communication protocols that build legitimate ma into sensitive processes. Password resets should always include a built-in pause. Information requests that automatically trigger a callback protocol. Architecture that creates space for cognitive transitions to complete.
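One way to picture a built-in pause is a reset flow that refuses to complete until a cooling-off period has elapsed. The sketch below is illustrative only: `ResetQueue`, `PAUSE_SECONDS`, and the five-minute value are assumptions, and the current time is passed in explicitly so the behavior is easy to follow.

```python
from dataclasses import dataclass, field

PAUSE_SECONDS = 300  # hypothetical five-minute cooling-off period


@dataclass
class ResetQueue:
    """Holds password reset requests until the pause has elapsed."""

    pause: float = PAUSE_SECONDS
    _requested_at: dict = field(default_factory=dict)

    def request(self, user: str, now: float) -> None:
        """Record when the reset was requested; nothing is reset yet."""
        self._requested_at[user] = now

    def complete(self, user: str, now: float) -> bool:
        """Allow the reset only once the full pause has passed since the request."""
        started = self._requested_at.get(user)
        if started is None or now - started < self.pause:
            return False
        del self._requested_at[user]
        return True


if __name__ == "__main__":
    queue = ResetQueue()
    queue.request("jennifer", now=0.0)
    print(queue.complete("jennifer", now=60.0))   # still inside the pause
    print(queue.complete("jennifer", now=300.0))  # pause has elapsed
```

The pause applies to everyone, legitimate users included, so the attacker's urgency has nothing to push against and no individual has to be the one who says no.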

Awareness

Security awareness that values the space between mental models as much as the models themselves, teaching employees to recognize state changes and give those movements appropriate time and attention.

For OSINT defense, implementation involves:

Information

Information architecture that deliberately crafts relationship spaces by mapping how public information relates and identifying which relationships between facts create vulnerability. Alternative relationships can maintain truth while changing meaning.

Defensive OSINT

Defensive OSINT, the practice of viewing your organization through an attacker's eyes to identify and mitigate vulnerabilities, becomes essential here. The OSINT Defense and Security Framework specifically addresses how attackers map relationships between collected data points. Monitoring for reconnaissance activity means tracking not just what information attackers gather but the connections they're making. These relationship assumptions reveal where attackers expect to find exploitable ma.

Strategy

Strategic disclosure that uses information release to create defensive ma through accurate information that creates temporal gaps, false patterns, or misleading relationships. The space between facts becomes defensive terrain.

For post-weaponization recovery, implementation requires:

Recovery

Recovery architecture that plans for the relationship space after compromise with emphasis on relational recovery beyond technical restoration. This includes implementing immutable backups that attackers cannot corrupt, separating backup authentication from production domains so a domain compromise doesn't equal backup compromise, and establishing isolated recovery environments. Trust rebuilding, team coordination changes, and temporary relationships all need advance consideration.

Timing

Recovery requires deliberate pacing. The rush to restore systems often reintroduces the same vulnerabilities that enabled the attack. Organizations should build intentional pauses into their recovery process. Assessment before restoration. Verification before reconnection. Each pause allows conscious reconstruction rather than reflexive rebuilding.
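The ordering described here (assessment before restoration, verification before reconnection) can be enforced as explicit gates rather than left to memory under pressure. A minimal sketch, with the step names and the `RecoveryPlan` class as illustrative assumptions:

```python
# Hypothetical step names mirroring the pauses described above.
RECOVERY_STEPS = ["assess", "restore", "verify", "reconnect"]


class RecoveryPlan:
    """Tracks recovery progress and refuses to skip a step."""

    def __init__(self) -> None:
        self.completed: list = []

    def complete(self, step: str) -> None:
        """Mark a step done, but only if it is the next one in the sequence."""
        if len(self.completed) == len(RECOVERY_STEPS):
            raise ValueError("recovery already finished")
        expected = RECOVERY_STEPS[len(self.completed)]
        if step != expected:
            raise ValueError(f"cannot {step!r} yet: next step is {expected!r}")
        self.completed.append(step)


if __name__ == "__main__":
    plan = RecoveryPlan()
    try:
        plan.complete("restore")  # skipping assessment is rejected
    except ValueError as err:
        print(err)
    plan.complete("assess")
    plan.complete("restore")
```

In practice each gate would carry evidence (who assessed, what was verified), but even this bare ordering makes the rush to restore a deliberate decision instead of a reflex.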

Relationship

Documentation that maps not just systems and processes, but the relationships between them. When rebuilding after compromise, you need to know more than what existed. You need to understand how everything connected, why those connections mattered, and which relationships created vulnerability.

The Cognitive Dimension of Security Architecture

Effective cybersecurity strategies must exist in the relationship between technical and human elements, yet current security architecture treats the human and the technical as separate domains (bridged only by policies and training).

Research by Daniel Kahneman has identified that our brains operate on two processing systems. System 1 is fast, automatic, intuitive. System 2 is slow, deliberate, analytical. Social engineering specifically targets System 1 while keeping System 2 occupied or offline. When someone calls during a busy workday claiming there’s an urgent IT issue, they’re deliberately attacking when your System 2 is already overwhelmed. Your brain defaults to System 1 patterns.

This is exactly what happened in Josh Junon’s phishing attack. You can see an interview where he discusses this here: https://www.youtube.com/watch?v=2nDLJyPVGMk. In Josh’s case, the space between receiving the email and taking action was essentially compressed to an instant.

- Authority triggers bypass critical thinking through “click-whirr” responses, automatic compliance patterns that organizations and social conditioning unknowingly train into us. When someone says “the CEO needs this,” we often respond before thinking.

- Reciprocity weaponizes our hardwired need to repay favors. An attacker who first provides helpful information creates an obligation in the space between receiving and giving, making refusal feel wrong even when suspicious.

- Urgency eliminates the pauses where judgment lives. “Your account will be locked on September 10, 2025,” as in the NPM attack, shuts down our analytical brain entirely, leaving only reflexive action.

- Social proof exploits our deep need for tribal alignment. “Everyone else in your department has already completed this” collapses the space between individual judgment and group belonging, making resistance feel like isolation.

Most insidiously, cognitive dissonance makes us justify small compromises, becoming complicit in our own breach.

Conclusion: The Architecture of Invisible Security

In the cybersecurity industry, we’ve built elaborate fortresses to defend each stage of attack, yet adversaries flow through our defenses like water, and we wake up each day to news of another devastating ransomware attack. Gartner reports that global spend on cybersecurity is at $213 billion for 2025, and this is expected to rise even further.

Ma (間) isn’t a complete answer, but maybe there’s value in considering these gaps, this space in between, as part of our security mindset. It’s clear that what we’ve been doing for the last two decades just isn’t working. Mine is just one more perspective among many, though this approach doesn’t require new technology or bigger budgets, which at least makes it worth considering.

The next time you receive an unexpected request, you might notice the space before you respond. That pause, that 間, could be worth exploring!

UPDATE - September 23, 2025:

Even as I write this article, supply chain attacks keep evolving, and attackers continue to demonstrate their intuitive mastery of ma, perhaps without even realizing it. The recently discovered FezBox npm package perfectly illustrates how threat actors take advantage of temporal spaces in their attacks. After installation, the malware waits exactly 2 minutes before executing its payload, which steals browser credentials via an embedded QR code.

This delay reveals sophisticated understanding of cognitive transitions. The code first verifies it’s running in a production environment (not a development sandbox), then initiates its wait. During the 2 minutes, several critical transitions occur. The developer who just installed the package has moved their attention elsewhere. Security tools that monitor npm install operations have completed their scans and found nothing suspicious. The mental state of “installing new dependency” with its heightened vigilance has shifted to “back to coding”. I don’t know about you but when I’m focused on coding I’m in the zone, and not thinking about vigilance.

What makes this particularly clever is how the delay transforms the attack surface. Traditional static analysis scans the package at installation and finds utility functions and a QR code module, nothing obviously malicious. By the time the actual credential theft occurs (2 minutes later), there’s no connection to the installation event. The ma between installation and execution becomes the attack itself.
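To make that gap between installation and execution a little more visible, a reviewer could flag unusually long timers in a package's JavaScript before installing it. The sketch below is a rough heuristic, not a description of how any real scanner works: `long_timers` and the 60-second threshold are assumptions, and it only sees single-line `setTimeout` calls whose delay is a plain numeric literal. Computed delays, multi-line calls, and obfuscated code would slip past it.

```python
import re

# Matches a numeric literal used as the final argument of a single-line setTimeout(...) call.
TIMER_RE = re.compile(r"setTimeout\s*\(.*,\s*(\d+)\s*\)")


def long_timers(js_source: str, threshold_ms: int = 60_000) -> list:
    """Return (line_number, delay_ms) pairs for timers at or above the threshold."""
    hits = []
    for lineno, line in enumerate(js_source.splitlines(), start=1):
        for match in TIMER_RE.finditer(line):
            delay = int(match.group(1))
            if delay >= threshold_ms:
                hits.append((lineno, delay))
    return hits


if __name__ == "__main__":
    sample = "setTimeout(run, 120000);\nsetTimeout(tick, 500);\n"
    for lineno, delay in long_timers(sample):
        print(f"line {lineno}: timer of {delay} ms")
```

A long timer is not malicious in itself. The point is simply to give the interval a presence in the review, so that a two-minute silence after install becomes a question worth asking.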